My most recent task at my new job was configuring a RAID of 3 SSDs and streaming its data to the network. It turned out that fast and stable reading from the RAID is a difficult problem.

First, I spent some time watching Brendan Gregg's talks. They are really inspiring. Thanks!

I used DPDK to stream data via an ixgbe interface, and a simple bash script to count bytes in /proc/net/dev. Unfortunately, much less data than expected arrived on the other side of the wire. The DPDK application only reads from a file and streams it to the network.
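The counting script itself is not part of the post. The following is a minimal sketch of the same idea, assuming the standard /proc/net/dev layout (8 receive counters after `iface:`, then the transmit counters). Ports bound to DPDK are not visible to the kernel, so this only works on a kernel-driven interface, presumably the receiving side; the interface name `eth1` used in the test and usage is hypothetical.

```shell
# iface_bytes IFACE [FILE]: print "rx_bytes tx_bytes" for IFACE.
# The first colon is replaced by a space because large counters can touch it
# ("eth1:123456..."), which would otherwise shift the awk fields.
iface_bytes() {
  sed 's/:/ /' "${2:-/proc/net/dev}" |
    awk -v ifc="$1" '$1 == ifc { print $2, $10 }'
}

# iface_rate IFACE [INTERVAL]: sample the counters twice and print RX/TX in Gbit/s.
iface_rate() {
  local ifc=$1 iv=${2:-1} before after
  before=$(iface_bytes "$ifc")
  sleep "$iv"
  after=$(iface_bytes "$ifc")
  echo "$before $after" | awk -v iv="$iv" '{
    printf "rx %.2f Gbit/s, tx %.2f Gbit/s\n", ($3 - $1) * 8 / iv / 1e9, ($4 - $2) * 8 / iv / 1e9 }'
}
```

For example, `while :; do iface_rate eth1 10; done` prints one line every 10 seconds. Taking two samples and dividing by the interval avoids having to reset the counters between runs.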

So there are two steps: reading from the file and streaming to the network. It turned out that reading from the file is the problem. Here I am interested only in sequential reads.

nmon and IBM's nmon analyser provide a good starting point:

```shell
nmon -ft -s 10 -c 8640 -m /tmp
```

However, the analyser needs Microsoft Excel, which is not installed on my computer. Fortunately, I can work with bash, sed, grep and gnuplot. A few minutes of coding and a few hours of testing produced the pictures below, recorded while running this loop:

```shell
while true; do
    dd if=/mnt/ssd/one_terabyte of=/dev/null bs=16M
done
```

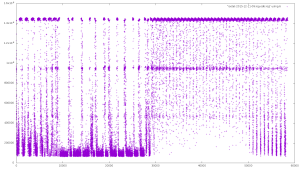

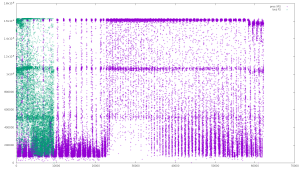

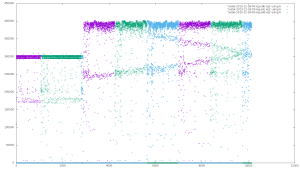

The X-axis shows time in seconds, the Y-axis iostat's "kB_read/s". On the right side, the throughput averages about 1400 MB/s, i.e. 11.2 Gbps, with only a few crosses near the bottom. On the left side of the picture, the read speed is very low (about 1 Gbps).
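The sed/grep/gnuplot scripts are not included in the post. As a rough sketch of the same pipeline, assuming output from `iostat -d -k DEVICE INTERVAL`, the kB_read/s column can be turned into "seconds value" pairs for gnuplot, and the decimal MB/s to Gbps conversion used above can be checked. The column is located from the header line, so both the older `Device:` and newer `Device` layouts should work.

```shell
# iostat_series DEVICE INTERVAL: read iostat output on stdin and print
# "seconds kB_read/s" for every report that contains DEVICE.
iostat_series() {
  awk -v dev="$1" -v step="$2" '
    /^Device/ { for (i = 1; i <= NF; i++) if ($i == "kB_read/s") col = i; next }
    col && $1 == dev { n++; print n * step, $col }'
}

# kbps_to_gbps KB_PER_S: decimal units, as in the text (1400 MB/s = 11.2 Gbps).
kbps_to_gbps() {
  awk -v k="$1" 'BEGIN { printf "%.1f\n", k * 1000 * 8 / 1e9 }'
}
```

One way to use it is to log first with `iostat -d -k sdb 10 > iostat.log`, then run `iostat_series sdb 10 < iostat.log > sdb.dat` and `gnuplot -e "set terminal png; set output 'sdb.png'; plot 'sdb.dat' using 1:2 with points"`. The first iostat report shows averages since boot and is best discarded.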

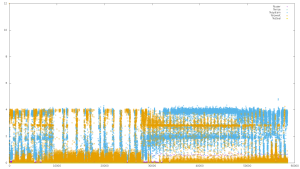

CPU utilization is most probably not the source of the problem: the CPU graph shows that the process spends its time in the iowait state. To be sure, I disabled all cron tasks.
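iowait is time during which a CPU was idle while I/O was outstanding, so high iowait with low user/system time points at storage rather than the CPU. A minimal sketch of measuring it without nmon, assuming the standard column order of the aggregate `cpu` line in /proc/stat (user nice system idle iowait irq softirq steal, then guest counters):

```shell
# iowait_pct LINE1 LINE2: percentage of CPU time spent in iowait between two
# samples of the "cpu" line. Only the first eight counters are summed, because
# the guest counters are already included in user time.
iowait_pct() {
  printf '%s\n%s\n' "$1" "$2" | awk '
    { tot = 0; for (i = 2; i <= 9; i++) tot += $i; io[NR] = $6; all[NR] = tot }
    END {
      d = all[2] - all[1]
      if (d <= 0) { print "0.0"; exit }
      printf "%.1f\n", 100 * (io[2] - io[1]) / d
    }'
}

# sample_iowait [INTERVAL]: measure iowait on this machine over INTERVAL seconds.
sample_iowait() {
  local a b
  a=$(grep '^cpu ' /proc/stat)
  sleep "${1:-1}"
  b=$(grep '^cpu ' /proc/stat)
  iowait_pct "$a" "$b"
}
```

Running `sample_iowait 10` next to the dd loop gives one number per interval that can be plotted against the read rate.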

I also tried reading directly from the block device, without the file system (green). The result looks very similar to reading through XFS (purple).

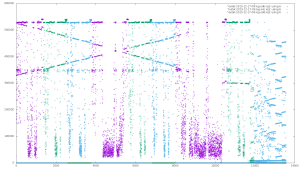

Finally, I removed the disks from the RAID and read from each SSD directly. In the picture, each color represents one disk. The last blue part is affected by smartctl: I tried to read the temperature every second, but it had a very negative effect on performance.

```shell
while true; do
    dd if=/dev/sdb of=/dev/null bs=16M
    dd if=/dev/sdc of=/dev/null bs=16M
    dd if=/dev/sdd of=/dev/null bs=16M
done
```

The air temperature was between 50 and 70 °C the whole time.
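Since polling smartctl every second hurt throughput, one option is to poll much less often. This is a sketch: the 60-second period is an arbitrary choice, not a measured safe value, and it assumes the usual `smartctl -A` table where RAW_VALUE is the 10th column. Drives name the temperature attribute differently, so both common names are accepted. Behind a PERC controller, smartctl typically needs an option such as `-d megaraid,N`, where N is the physical disk number.

```shell
# smart_temp: read `smartctl -A` output on stdin and print the raw value of the
# first temperature attribute found (column 10, RAW_VALUE).
smart_temp() {
  awk '$2 == "Temperature_Celsius" || $2 == "Airflow_Temperature_Cel" { print $10; exit }'
}

# watch_temp DEVICE [SMARTCTL_OPTS...]: log "epoch temperature" once per minute.
# Extra options (e.g. -d megaraid,0) are passed to smartctl before the device.
watch_temp() {
  local dev=$1
  shift
  while :; do
    printf '%s %s\n' "$(date +%s)" "$(smartctl -A "$@" "$dev" | smart_temp)"
    sleep 60
  done
}
```

For a disk taken out of the RAID this could be `watch_temp /dev/sdb >> temp.log &`, started before the dd loop.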

It is clear that this is not a problem of the RAID itself, but of the SSDs, the controller, the motherboard, or their compatibility. Next, I added cpipe to limit the read speed. There is a very interesting pattern forming a cross; again, I cannot say what causes it. As you can see, the maximum speed of a single SSD is 500 MB/s. I also tried 300 MB/s and 400 MB/s; 300 MB/s looks reliable.

```shell
dd if=/dev/sdb bs=4M | cpipe -b 32768 -s "$SPEED_LIMIT" | dd of=/dev/null bs=4M
```
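Trying several limits can be scripted by looping over them. This is a minimal sketch built on the pipeline above: each LIMIT is passed to `cpipe -s` unchanged, in whatever unit the original `$SPEED_LIMIT` used, and `wc -c` replaces the final dd so the number of bytes read is known. The average rate is computed with decimal MB/s.

```shell
# sweep_limits DEVICE LIMIT...: read DEVICE once per speed limit through cpipe
# and print "limit elapsed_seconds average_MB/s".
sweep_limits() {
  local dev=$1 limit start end bytes
  shift
  for limit in "$@"; do
    start=$(date +%s.%N)
    bytes=$(dd if="$dev" bs=4M 2>/dev/null | cpipe -b 32768 -s "$limit" | wc -c)
    end=$(date +%s.%N)
    awk -v l="$limit" -v s="$start" -v e="$end" -v b="$bytes" \
      'BEGIN { t = e - s; printf "%s %.1f %.0f\n", l, t, (t > 0 ? b / t / 1e6 : 0) }'
  done
}
```

For example, `sweep_limits /dev/sdb "$SPEED_LIMIT"` runs a single pass; listing several values compares them in one go. Only the average is printed, so the per-second view still comes from iostat.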

During testing I found a couple of interesting settings.

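It is better to use the noop scheduler for SSDs. If I am not wrong, it is the default in Fedora and CentOS 7. Note that the active scheduler is the one in square brackets, which is deadline in this output: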

```
$ cat /sys/block/sdb/queue/scheduler
noop [deadline] cfq
```
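Reading and changing the scheduler can be wrapped in two small helpers. This is a sketch: sdb is the device from this post, the change needs root and is lost on reboot, and on newer multi-queue (blk-mq) kernels the noop equivalent is called none.

```shell
# active_sched [FILE]: print the active scheduler, i.e. the name in [brackets].
active_sched() {
  sed -n 's/.*\[\(.*\)\].*/\1/p' "${1:-/sys/block/sdb/queue/scheduler}"
}

# set_sched DEV NAME: select scheduler NAME for DEV (e.g. sdb noop); root only.
set_sched() {
  echo "$2" > "/sys/block/$1/queue/scheduler"
}
```

After `set_sched sdb noop`, `active_sched` should print noop. Making it permanent needs something outside this sketch, such as the elevator= kernel parameter on kernels of that era or a udev rule.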

XFS should perform better (by 10%). Some benchmarks say so, but I have no reference for this.

I want to minimize the number of writes to the SSD, so I mount it with "noatime,nodiratime" (on Linux, noatime already implies nodiratime).

```
$ tail -n 2 /etc/fstab
/dev/sdb1 /mnt/ssd xfs rw,noatime,nodiratime,nofail 0 0
```
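To confirm that the option is actually active (for example after `mount -o remount,noatime /mnt/ssd`, which applies it without a reboot), the live mount table can be checked. A minimal sketch, assuming the /proc/mounts format where field 2 is the mount point and field 4 the comma-separated options:

```shell
# has_mount_opt MOUNTPOINT OPTION [MOUNTS_FILE]: exit 0 if OPTION is among the
# active mount options of MOUNTPOINT, 1 otherwise.
has_mount_opt() {
  awk -v mp="$1" -v opt="$2" '
    $2 == mp { n = split($4, o, ","); for (i = 1; i <= n; i++) if (o[i] == opt) found = 1 }
    END { exit !found }' "${3:-/proc/mounts}"
}
```

Usage: `has_mount_opt /mnt/ssd noatime && echo "noatime is active"`.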

The XFS file system can become fragmented, which can be fixed with:

```shell
xfs_fsr /mnt/ssd
```
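Before defragmenting, it may be worth checking whether fragmentation is present at all. A small sketch, assuming the usual `xfs_db -r -c frag` output line of the form "actual N, ideal M, fragmentation factor X%":

```shell
# frag_factor: read `xfs_db -r -c frag DEVICE` output on stdin and print the
# fragmentation factor in percent.
frag_factor() {
  sed -n 's/.*fragmentation factor \([0-9.]*\)%.*/\1/p'
}
```

Usage: `xfs_db -r -c frag /dev/sdb1 | frag_factor` for the whole file system (read-only). For the single test file, `filefrag /mnt/ssd/one_terabyte` reports its extent count, and `xfs_fsr -v /mnt/ssd/one_terabyte` defragments just that file.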

I am not sure which mkfs parameters are best.

I should drop the page cache before each test. In my case this matters less, because the disks hold 500 GB and I was reading the whole content, which cannot fit into the cache.

```shell
sync; echo 3 > /proc/sys/vm/drop_caches
```

I can also set the read-ahead. blockdev --setra counts in 512-byte sectors, so 65536 means 32 MiB:

```shell
blockdev --setra 65536 /dev/sdb
```
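The same setting is also exposed in sysfs, but in KiB rather than sectors, which makes it easy to misread. A small sketch to convert and to print both views side by side (the device name is an assumption; the value is not persistent across reboots):

```shell
# ra_sectors_to_kb SECTORS: blockdev --setra/--getra use 512-byte sectors,
# /sys/block/DEV/queue/read_ahead_kb uses KiB.
ra_sectors_to_kb() {
  echo $(( $1 * 512 / 1024 ))
}

# show_ra DEV: print both views of the current read-ahead for DEV (e.g. sdb).
show_ra() {
  printf 'getra=%s sectors, read_ahead_kb=%s\n' \
    "$(blockdev --getra "/dev/$1")" "$(cat "/sys/block/$1/queue/read_ahead_kb")"
}
```

After the command above, `show_ra sdb` should report 65536 sectors and 32768 KiB.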

I can also modify the queue depth, but I am not sure which value is best in my case.

```shell
cat /sys/block/sdb/device/queue_depth
```
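Two related knobs are worth recording together: queue_depth is the number of commands the SCSI layer keeps outstanding at the device (here the PERC virtual disk), and nr_requests is the size of the block layer's request queue. A sketch that prints both for several devices; the sysfs root is a parameter only so the function can be tested, normally it is /sys:

```shell
# show_queue SYSFS_ROOT DEV...: print queue_depth and nr_requests for each DEV,
# or n/a when the attribute does not exist.
show_queue() {
  local root=$1 d qd nr
  shift
  for d in "$@"; do
    qd=$(cat "$root/block/$d/device/queue_depth" 2>/dev/null || echo n/a)
    nr=$(cat "$root/block/$d/queue/nr_requests" 2>/dev/null || echo n/a)
    printf '%s queue_depth=%s nr_requests=%s\n' "$d" "$qd" "$nr"
  done
}
```

Usage: `show_queue /sys sdb sdc sdd`. As root, queue_depth can usually be changed by writing a number to the same file, provided the driver supports it; which value is best here remains open.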

I should add the hardware description:

- Dell R430

- PERC H730 Mini

- 3x Samsung 850 EVO SSD, 500 GB

I configured the RAID with Dell's OMSA tools, using the default values except for the stripe size, which was set to 512:

```
# /opt/dell/toolkit/bin/raidcfg -vd
Controller: PERC H730 Mini
VDisk ID: 0
Virtual Disk Name: SSD
Size: 1429248 MB (1395 GB)
Type: RAID 0
Read Policy: Normal Read Ahead
Write Policy: Write Back
Cache Policy: Unchanged
Stripe Size: 512
Drives: 0:1:1,0:2:1,0:3:1
BootVD: Yes
T10 Protection Info: Disabled
Encrypted: No
```
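Regarding the open question about mkfs parameters: one common approach is to align XFS to the hardware stripe with `-d su=...,sw=...`. This sketch derives those values from the raidcfg output above. It assumes "Stripe Size" is in KB per disk and that every listed drive holds data, which is true for RAID 0 but not for RAID 5/6. It only prints the command, because running mkfs.xfs destroys all data on the device; /dev/sdb1 is taken from the fstab line.

```shell
# mkfs_from_raidcfg DEVICE: read `raidcfg -vd` output on stdin and print (not run)
# an mkfs.xfs command with stripe unit = stripe size and stripe width = drive count.
mkfs_from_raidcfg() {
  awk -v dev="$1" '
    /^Stripe Size:/ { su = $NF }
    /^Drives:/      { s = $0; sub(/^Drives: */, "", s); sw = split(s, d, ",") }
    END { printf "mkfs.xfs -f -d su=%sk,sw=%d %s\n", su, sw, dev }'
}
```

Usage: `/opt/dell/toolkit/bin/raidcfg -vd | mkfs_from_raidcfg /dev/sdb1`, which for this configuration would print `mkfs.xfs -f -d su=512k,sw=3 /dev/sdb1`. Whether alignment changes the dips seen here is untested.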

The PERC H730 should deliver up to 12 Gbps, and each SSD about 4 Gbps. I know that RAID-0 does not simply sum the drive speeds because there is some overhead. Still, I did not expect such results.
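The expectation above can be made explicit with a back-of-the-envelope ceiling: disks read in parallel with no overhead, capped by the controller figure (treated here as an aggregate limit, as the text does). Decimal units are used, as elsewhere in the post, so 1 Gbps = 125 MB/s. This is a simplification, not a model of the PERC.

```shell
# raid0_ceiling PER_DISK_GBPS NDISKS CONTROLLER_GBPS: ideal RAID-0 sequential
# read rate, in Gbit/s and MB/s, capped by the controller.
raid0_ceiling() {
  awk -v d="$1" -v n="$2" -v c="$3" 'BEGIN {
    r = d * n
    if (r > c) r = c
    printf "%.1f Gbps = %.0f MB/s\n", r, r * 1000 / 8
  }'
}
```

`raid0_ceiling 4 3 12` gives 12.0 Gbps = 1500 MB/s, so under these assumptions the roughly 1400 MB/s seen on the good side of the first plot is already close to the ceiling, and the dips are the anomaly. With the 300 MB/s per disk that looked reliable under cpipe (2.4 Gbps), `raid0_ceiling 2.4 3 12` gives 7.2 Gbps = 900 MB/s.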

I would be happy for any advice.
